Microsoft is known for its innovations, so this will come as no surprise. More than a year ago, Microsoft applied for a number of patents related to touchscreen gestures on tablets. Many of them concern a dual-screen device, conjuring images of the once highly anticipated Courier slate. The others focus mainly on bezel gestures. The applications have now been made public, though the patents have not yet been granted.

What’s new this time? Here is a rundown of the filings:

OFF-SCREEN GESTURES TO CREATE ON-SCREEN INPUT:

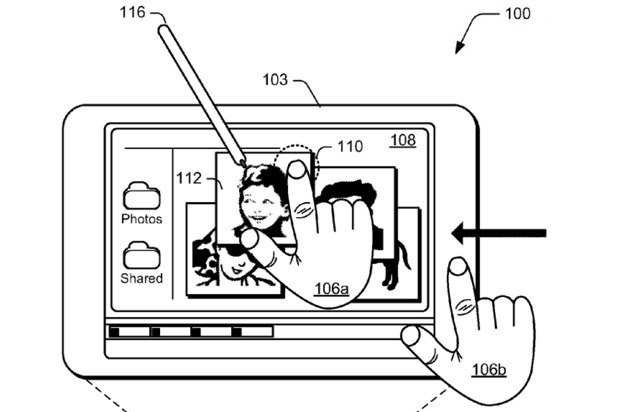

This is the first and most interesting of the batch. We’ve seen something similar on the BlackBerry PlayBook: to wake the device, you slide your finger from the bottom bezel up onto the screen, and multitasking uses the same gesture starting from the sides of the slate. Microsoft’s patent essentially brings the bezel into the equation as far as gestures go. It covers single-touch gestures, multi-finger same-hand gestures, and multi-finger different-hand gestures, all of which can move along the bezel from the inside out or from the outside in. Now all Microsoft needs is multi-toe, different-foot gestures and the bezel is theirs. The patent also mentions using bezel gestures to bring up a drop-down menu.
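
The core idea of a bezel gesture is that a stroke crosses the boundary of the display. Below is a minimal Python sketch of that idea, assuming a digitizer that also reports touches on the bezel as coordinates just outside the display rectangle, in screen coordinates where y grows downward. `Display` and `classify_bezel_swipe` are hypothetical names for illustration, not a Microsoft API or the patent’s actual method.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class Display:
    # Assumption: the active display spans [0, width) x [0, height), and
    # bezel touches are reported as points just outside that rectangle.
    width: float
    height: float

    def contains(self, p: Point) -> bool:
        x, y = p
        return 0 <= x < self.width and 0 <= y < self.height

    def edge_of(self, p: Point) -> str:
        # Name the bezel edge an outside point lies beyond (y grows downward).
        x, y = p
        if x < 0:
            return "left"
        if x >= self.width:
            return "right"
        if y < 0:
            return "top"
        return "bottom"


def classify_bezel_swipe(display: Display, stroke: Sequence[Point]) -> Optional[str]:
    """Return e.g. 'bottom:outside-in' for a stroke crossing the bezel, else None."""
    if len(stroke) < 2:
        return None
    start, end = stroke[0], stroke[-1]
    start_in, end_in = display.contains(start), display.contains(end)
    if not start_in and end_in:
        return f"{display.edge_of(start)}:outside-in"
    if start_in and not end_in:
        return f"{display.edge_of(end)}:inside-out"
    return None  # the stroke never crossed the display boundary
```

A PlayBook-style wake gesture would then be a stroke classified as `"bottom:outside-in"`. Multi-finger variants could run the same check per finger and compare the results.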

RADIAL MENUS WITH BEZEL GESTURES:

We have another bezel-related patent, this time concerned with radial menus. It describes a way to access, navigate, and use an on-screen radial menu by means of bezel gestures. We haven’t seen many radial (pie) menus outside of the Microsoft Surface, apart from a small taste of one in Honeycomb’s browser. The Honeycomb pie menu works by placing your finger half on the screen and half on the bezel, although only the screen actually receives the input.
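
Once a radial menu is open, choosing an item typically comes down to converting the touch’s angle around the menu centre into a slice index. The post does not describe how Microsoft’s patent does this, so the following is only a generic pie-menu sketch. `pick_radial_item` and the 20-pixel dead zone are hypothetical choices.

```python
import math
from typing import Optional, Sequence, Tuple


def pick_radial_item(center: Tuple[float, float], touch: Tuple[float, float],
                     items: Sequence[str], dead_zone: float = 20.0) -> Optional[str]:
    """Map a touch point to a slice of a pie menu centred at `center`."""
    if not items:
        return None
    dx = touch[0] - center[0]
    dy = center[1] - touch[1]  # flip sign: screen y grows downward
    if math.hypot(dx, dy) < dead_zone:
        return None  # too close to the centre to pick a slice reliably
    full_turn = 2 * math.pi
    angle = math.atan2(dy, dx) % full_turn  # 0 = right, increasing counterclockwise
    slice_width = full_turn / len(items)
    # Shift by half a slice so item 0 is centred on "right" rather than starting there.
    index = int(((angle + slice_width / 2) % full_turn) // slice_width)
    return items[min(index, len(items) - 1)]  # guard against float rounding near 2*pi
```

With four items ordered right, up, left, down, a finger dragged straight up from the centre selects the second item. The dead zone keeps a finger that has barely moved from triggering a selection.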

MULTI-FINGER GESTURES:

This is the last of the bezel patents. It is very similar to the first one above, but it covers exactly what its name implies: multi-finger gestures. It also extends those gestures to devices with multiple screens, and it covers the use of multi-finger gestures with one or more exposable drawers, which can be customized.

MULTI-SCREEN DUAL TAP GESTURE:

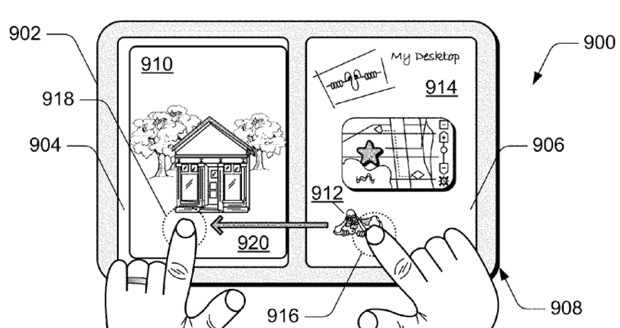

Embodiments of a multi-screen dual tap gesture are described. In various embodiments, a first tap input to a displayed object is recognized at a first screen of a multi-screen system. A second tap input to the displayed object is recognized at a second screen of the multi-screen system, and the second tap input is recognized approximately when the first tap input is recognized. A dual tap gesture can then be determined from the recognized first and second tap inputs.
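
To make the “approximately when” condition concrete, here is a minimal Python sketch, assuming each tap arrives with a screen number, the ID of the object under the finger, and a timestamp. The `Tap` type, `is_dual_tap`, and the 150 ms tolerance are hypothetical choices for illustration; the abstract does not specify a time window.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tap:
    screen: int      # which screen of the multi-screen system was tapped
    object_id: str   # displayed object under the finger (from hit-testing)
    t_ms: float      # timestamp in milliseconds


def is_dual_tap(first: Tap, second: Tap, tolerance_ms: float = 150.0) -> bool:
    """Two taps on different screens, on the same object, at roughly the same time."""
    return (first.screen != second.screen
            and first.object_id == second.object_id
            and abs(first.t_ms - second.t_ms) <= tolerance_ms)
```

The tolerance is the main design knob: too small and genuine two-handed taps are missed, too large and two separate taps get merged into one gesture.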

MULTI-SCREEN PINCH AND EXPAND GESTURES:

Embodiments of multi-screen pinch and expand gestures are described. In various embodiments, a first input is recognized at a first screen of a multi-screen system, and the first input includes a first motion input. A second input is recognized at a second screen of the multi-screen system, and the second input includes a second motion input. A pinch gesture or an expand gesture can then be determined from the first and second motion inputs that are associated with the recognized first and second inputs.
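
One straightforward way to tell a pinch from an expand, given one motion input per screen, is to compare the distance between the two fingers before and after the motion. The sketch below assumes both screens report touches in one shared coordinate space (for example, screen 2 offset to the right of screen 1). `classify_pinch_expand` and the 30-pixel threshold are hypothetical, not taken from the patent.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def classify_pinch_expand(a_start: Point, a_end: Point,
                          b_start: Point, b_end: Point,
                          min_change: float = 30.0) -> Optional[str]:
    """a_* is the finger on the first screen, b_* the finger on the second."""
    before = math.dist(a_start, b_start)  # finger separation when the motion began
    after = math.dist(a_end, b_end)       # finger separation when it ended
    if after <= before - min_change:
        return "pinch"    # fingers moved toward each other across the screens
    if after >= before + min_change:
        return "expand"   # fingers moved apart
    return None           # change too small to call either way
```

For instance, fingers starting 200 pixels apart and ending 40 pixels apart are classified as a pinch. The threshold filters out small jitter while the fingers rest on the glass.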

MULTI-SCREEN BOOKMARK HOLD GESTURE:

Embodiments of a multi-screen bookmark hold gesture are described. In various embodiments, a hold input is recognized at a first screen of a multi-screen system, and the hold input is recognized when held in place proximate an edge of a journal page that is displayed on the first screen. A motion input is recognized at a second screen of the multi-screen system while the hold input remains held in place. A bookmark hold gesture can then be determined from the recognized hold and motion inputs, and the bookmark hold gesture is effective to bookmark the journal page at a location of the hold input on the first screen.
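
The abstract combines three conditions: a hold near a page edge on one screen, the hold staying in place, and motion on the other screen at the same time. Here is a minimal sketch of that check. The input types, the 40-pixel edge margin, the 10-pixel “held in place” slop, and the 50-pixel minimum travel are all hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class HoldInput:
    screen: int
    start: Point      # where the finger first came down
    current: Point    # where the finger is now
    still_down: bool  # False once the finger lifts


@dataclass
class MotionInput:
    screen: int
    travel: float     # distance moved by the finger on the other screen


def bookmark_hold(hold: HoldInput, motion: MotionInput, page_size: Tuple[float, float],
                  edge_margin: float = 40.0, slop: float = 10.0,
                  min_travel: float = 50.0) -> Optional[Point]:
    """Return the bookmark location if the inputs form a bookmark hold gesture."""
    width, height = page_size
    x, y = hold.start
    near_edge = (x <= edge_margin or x >= width - edge_margin
                 or y <= edge_margin or y >= height - edge_margin)
    held_in_place = math.dist(hold.start, hold.current) <= slop
    if (hold.still_down and near_edge and held_in_place
            and motion.screen != hold.screen and motion.travel >= min_travel):
        return hold.start  # bookmark the journal page at the hold location
    return None
```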

MULTI-SCREEN HOLD AND PAGE-FLIP GESTURE:

Embodiments of a multi-screen hold and page-flip gesture are described. In various embodiments, a hold input is recognized at a first screen of a multi-screen system, and the hold input is recognized when held to select a journal page that is displayed on the first screen. A motion input is recognized at a second screen of the multi-screen system, and the motion input is recognized while the hold input remains held in place. A hold and page-flip gesture can then be determined from the recognized hold and motion inputs, and the hold and page-flip gesture is effective to maintain the display of the journal page while one or more additional journal pages are flipped for display on the second screen.
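
The effect described here can be pictured as a two-page journal view where a hold on the first screen pins the left page while swipes on the second screen keep advancing the right page. Below is a minimal state sketch under that assumption. `JournalView` and its methods are hypothetical names, and the unheld branch simply advances a normal two-page spread.

```python
from dataclasses import dataclass


@dataclass
class JournalView:
    page_count: int
    left: int = 0           # page shown on the first screen
    right: int = 1          # page shown on the second screen
    left_held: bool = False

    def hold_left(self) -> None:
        self.left_held = True   # hold input on the first screen selects its page

    def release(self) -> None:
        self.left_held = False

    def flip_forward(self) -> None:
        """Handle one page-flip motion on the second screen."""
        if self.left_held:
            # Hold and page-flip: the held page stays; only the second screen advances.
            self.right = min(self.right + 1, self.page_count - 1)
        elif self.right + 2 < self.page_count:
            # No hold: turn the whole two-page spread, if a full spread remains.
            self.left += 2
            self.right += 2
```

Holding the left page and flipping twice leaves page 0 on the first screen and page 3 on the second, so the two can be compared side by side.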

MULTI-SCREEN HOLD AND TAP GESTURE:

Embodiments of a multi-screen hold and tap gesture are described. In various embodiments, a hold input is recognized at a first screen of a multi-screen system, and the hold input is recognized when held to select a displayed object on the first screen. A tap input is recognized at a second screen of the multi-screen system, and the tap input is recognized when the displayed object continues being selected. A hold and tap gesture can then be determined from the recognized hold and tap inputs.
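
Because the tap only counts while the object on the first screen is still selected, a recognizer for this gesture needs a little state. The following is a minimal sketch, assuming the system reports hold start, hold release, and tap events separately. `HoldAndTapRecognizer` is a hypothetical name, and what an application does with the returned object and tap location is left open, as in the abstract.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Location = Tuple[float, float]


@dataclass
class HoldAndTapRecognizer:
    held_object: Optional[str] = None  # object selected by the current hold, if any
    hold_screen: Optional[int] = None  # screen where that hold is happening

    def hold_started(self, screen: int, object_id: str) -> None:
        self.held_object, self.hold_screen = object_id, screen

    def hold_released(self) -> None:
        self.held_object, self.hold_screen = None, None

    def tap(self, screen: int, location: Location) -> Optional[Tuple[str, Location]]:
        """Return (held object, tap location) if this tap completes a hold and tap."""
        if self.held_object is not None and screen != self.hold_screen:
            return (self.held_object, location)
        return None  # no active hold, or the tap landed on the holding screen
```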

There is truly a lot to look forward to. A few of these ideas might well revolutionize the tech world, but it’s going to be some time before we get our hands on any of them. Microsoft, rising high.

Thanks to Krishna for the information.